Have you ever called out an AI on its hallucination? I guarantee it will come up with a bogus justification, just as if you had asked a six-year-old why their hand was in the cookie jar. If you press the topic, it will start contradicting itself, misstating what you said, and otherwise operating like a pathological liar. This is infuriating, unless you know a bit about what’s going on.

The thing about chat models is that their developers train them to have successful conversations. They are shown chats that users like on average, and then they are updated to behave that way. Many people use AI to research and learn, so responses that help the user learn and keep them engaged enough to ask another question are well-liked. These are pleasant conversations… when they’re going well!

The problem is that models are only simulating a factual and engaging response. This is often fine. A simulated response is often, or even usually, factual. But when you ask for a fact the model does not have, it still does what it was trained to do: give an engaging response that contains a fact, true or not.

The result can be pretty ugly. If you had a friend behaving this way, confidently stating things that you know to be false (and you know they know it too), you’d go find a new friend.

Models can’t help it. Fortunately, we users can keep AI on the right path with some understanding of its internals.

How AI gets stuck

Today’s AI is powered by a particular kind of deep neural network called a Transformer. It’s true that a Transformer simply predicts the next word (strictly, the next token, which is a word or a piece of one), but if you’ve been using AI, I’m sure you already suspect it is more complicated than that.

Each next word is chosen from many inputs. Most importantly, it is based on everything said so far in the conversation. I won’t go into the math, but every word imparts some “momentum” to the conversation. What this means is that every single word you’ve given the AI is used in part to determine the next word. This momentum is important to generative AI; otherwise it wouldn’t be able to carry a conversation.

But this can be a lot of momentum! Every time you send a message to an AI, it is given the developer’s system prompt, everything you’ve said in the conversation so far, and everything the AI has said in the conversation so far. Perhaps you can see where this is going: if you and the AI have spent a lot of words on one topic, the AI will tend to keep talking about that topic, simply because so many of the words in the prompt are dedicated to it.
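Here is a minimal sketch of that assembly step, in Python. The function names, the "speaker: text" layout, and the sample conversation are all my own assumptions for illustration; real chat products format their prompts differently. The point is only that the newest message is a small slice of what the model actually reads.

```python
def build_prompt(system_prompt, history, new_message):
    """Assemble everything the model reads before writing one reply.

    history is a list of (speaker, text) pairs from earlier turns.
    The layout here is hypothetical, not any vendor's real format.
    """
    lines = [f"system: {system_prompt}"]
    lines += [f"{speaker}: {text}" for speaker, text in history]
    lines.append(f"user: {new_message}")
    return "\n".join(lines)


def share_of_prompt(prompt, new_message):
    """Fraction of the prompt's words that come from the newest message."""
    return len(new_message.split()) / len(prompt.split())


# A made-up conversation that has been about one topic the whole time.
history = [
    ("user", "Tell me about VLAN design for a small office."),
    ("assistant", "A VLAN separates traffic on one switch into logical networks."),
    ("user", "How many VLANs do I need?"),
    ("assistant", "Start with three: staff, guests, and devices."),
]
new_message = "Stop talking about VLANs."

prompt = build_prompt("You are a helpful assistant.", history, new_message)
print(prompt)
print(f"{share_of_prompt(prompt, new_message):.0%} of the words are the new request")
```

Even in this four-turn example, the request to change the subject is a small fraction of the prompt, and it shrinks with every turn.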

An AI will continue to do this even when you ask it not to, because from its perspective, your earlier requests to keep going are all still sitting in the prompt it reads for this very reply! Your twelve prompts that ended up as a major dead end continue to affect what the AI tells you next.

Taking a walk

Imagine you are in the middle of a great big open field with your friend, Copilot. You can walk in any direction, just as you can discuss any topic. You pick a direction, and you begin walking together.

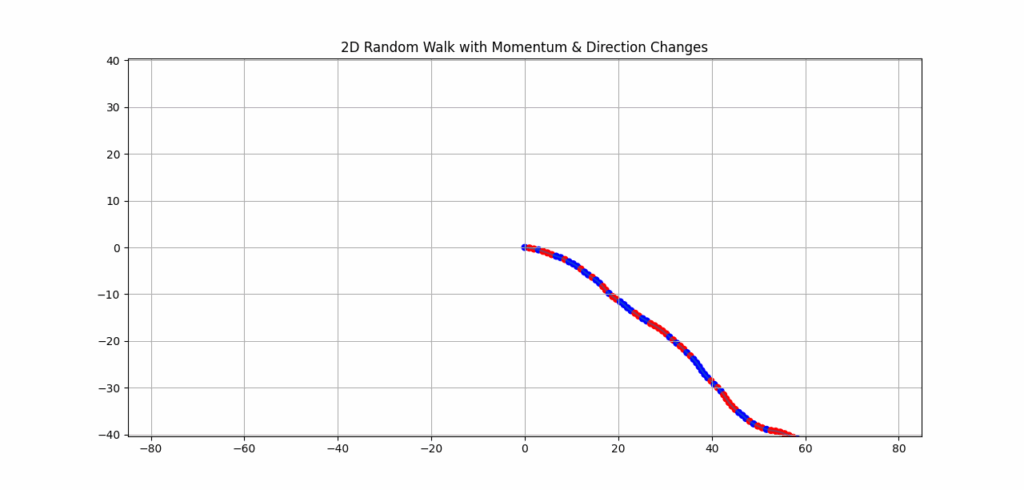

Soon, you want to change directions a little bit. This is easy enough, and you shift to the side. You continue walking for some distance, sometimes making small adjustments to your direction. After an hour, you’ve walked miles. Perhaps your walk with Copilot looks something like this when viewed from above:

Even though you’ve been adjusting your direction as you walk, you are now miles away from your starting point. Importantly, you are still to the Southeast, the direction you set off in. You can walk around this new area, but you are a long way from where you’d be if you had walked Northwest. If you now want to go Northwest from where you started, you have to walk all the way back. You’re stuck here in the Southeast area.

In case it wasn’t obvious, that was a metaphor. Early in a conversation with a Transformer AI, changing topics also changes your relative position in the abstract meaning-space that AI works in. Late in a conversation, your AI is deeply entrenched in one region of that meaning-space. No matter which way you turn, the AI can’t go all the way back.
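If you like numbers better than fields, here is a toy version of the metaphor in Python. It is an assumption-heavy sketch: I treat each turn of the conversation as a 2D direction and the conversation’s “position” as the plain average of all turns so far. A real Transformer does not average anything this simply, so read it as an illustration of the metaphor, not of the internals.

```python
def position(turns):
    """Average of all turn vectors: a toy stand-in for where the chat 'is'."""
    n = len(turns)
    return (sum(x for x, _ in turns) / n, sum(y for _, y in turns) / n)


# Hypothetical directions: east and north are positive.
SOUTHEAST = (1.0, -1.0)
NORTHWEST = (-1.0, 1.0)

early = [SOUTHEAST] * 2    # two turns into the conversation
late = [SOUTHEAST] * 50    # fifty turns into the conversation

# Add a single turn in the opposite direction to each conversation.
early_after = position(early + [NORTHWEST])
late_after = position(late + [NORTHWEST])

print("early chat, after one Northwest turn:", early_after)
print("late chat, after one Northwest turn: ", late_after)
```

In the short conversation, one Northwest turn pulls the average most of the way back toward the middle of the field. In the long one, the same turn barely registers.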

How to reset your path

The good news is that you can instantly return to the middle of the open field. Just click an icon like this for a new chat:

Unfortunately, it also means you’ve lost all the context of your hours of conversation. The recent push to add memory features to chatbots is an attempt to salvage that context without carrying you too far astray.

This conflict between wanting to keep context for improving conversations and getting stuck in a dead end is the major force behind investments in AI memory.

Memory features of ChatGPT and others try to keep just the important parts of a conversation available for future conversations. If the developer includes too much, it is difficult to overcome it to go in a new direction. If they don’t include enough, the user loses a lot of value and gets frustrated.

Frequent AI users handle this manually:

- When you sense the AI is stuck, have it summarize everything accomplished or decided so far.

- Copy that result.

- Start a new chat and paste the summary in.

This keeps the important part of your position while removing the thousands of words you’ve typed that will make it harder to turn. You’re effectively starting a new walk, centered in this new and more correct place in the AI’s meaning-space.
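The same three steps can be sketched in Python. Everything named here is hypothetical: `restart_with_summary` is my own helper, and `fake_summarize` is a stand-in for the step where you would really ask the AI to write the summary. The made-up transcript marks decisions with a "DECISION:" tag only so the placeholder has something to pick out.

```python
def restart_with_summary(history, summarize):
    """Return a fresh history that holds only a summary of the old one.

    `summarize` is any callable that turns the old transcript into a short
    string. In practice that is the AI itself, asked to summarize.
    """
    transcript = "\n".join(f"{speaker}: {text}" for speaker, text in history)
    summary = summarize(transcript)
    return [("user", f"Summary of decisions so far:\n{summary}")]


def fake_summarize(transcript):
    """Placeholder summarizer: keep only the lines marked as decisions."""
    decisions = [
        line.split("DECISION:", 1)[1].strip()
        for line in transcript.splitlines()
        if "DECISION:" in line
    ]
    return "\n".join(f"- {d}" for d in decisions)


# A made-up conversation with one dead end and two real decisions.
old_history = [
    ("user", "Let's plan the network."),
    ("assistant", "DECISION: Use one equipment closet."),
    ("user", "What about running fiber to every room?"),
    ("assistant", "Here is a long tangent about fiber that went nowhere."),
    ("assistant", "DECISION: Keep guest Wi-Fi on its own network."),
]

new_history = restart_with_summary(old_history, fake_summarize)
print(new_history[0][1])
```

The new chat opens with the two decisions and none of the tangent, which is exactly what you want it to carry forward.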

Don’t get lost

A more robust form of manually managing context is to have the AI periodically update a separate file with decisions and results. Claude Code supports this kind of context management for programming (it even has a “/compact” command that condenses the conversation so far into a summary), and I’ve been using Copilot Pages for the same purpose in other domains.
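If your tool has no feature like this, a plain text file works too. Below is a minimal sketch in Python of a decision log you append to as you go and paste from when you start a new chat. The file name, the line format, and the sample decisions are all assumptions I made up for the example; this is not how Claude Code or Copilot Pages store anything.

```python
import tempfile
from datetime import date
from pathlib import Path


def record_decision(log_path, topic, decision, today=None):
    """Append one dated decision to a plain-text log file."""
    today = today or date.today()
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(f"- {today.isoformat()} [{topic}] {decision}\n")


def load_decisions(log_path):
    """Read the whole log back, or an empty string if it does not exist yet."""
    path = Path(log_path)
    return path.read_text(encoding="utf-8") if path.exists() else ""


# Use a temporary folder so the example runs anywhere without leaving clutter.
log_path = Path(tempfile.mkdtemp()) / "decisions.md"

# Hypothetical decisions, recorded as the conversation produces them.
record_decision(log_path, "network", "Separate guest Wi-Fi from staff devices.")
record_decision(log_path, "network", "Run cabling to a single equipment closet.")

# The opening message for a fresh chat: just the decisions, no dead ends.
opening_message = "Here are the decisions so far:\n" + load_decisions(log_path)
print(opening_message)
```

Because the log only ever holds conclusions, it stays short no matter how long the conversations that produced it were.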

This week I’m designing the network infrastructure for a new church building. I haven’t designed a network since my CCNA classes 20 years ago, so I needed a lot of help from Copilot.

It’s important to start off in the right direction. I started out the conversation with a summary of what I wanted to accomplish. Next, I had Copilot help me with a variety of questions at a high level, and then we started completing the logical design. After all of the individual pieces of logical design were complete, I had Copilot summarize the decisions so far, and I copied it into a new Page.

From here, I did a few things to intentionally manage context:

- Ask more clarifying questions in the initial conversation without the Page, to take advantage of the entire context

- After understanding some element better, ask for updates to the design in the new conversation created as part of the Page

- Ask related questions, such as physical hardware choices, in a new conversation to not foul up either of the other two conversations

Now that you’re aware of what’s going on, you’ll find your own ways of managing context. And don’t get mad when you catch an AI with its hand in the cookie jar! Instead, save the important context and reset the conversation.