We’ve taught software to think. Now we need to teach it to act.

Our industry uses the word “agent” for AI software that can do things, but that isn’t the whole story. There are at least two definitions of “agent,” and they are both important!

The first is a philosophical definition, related to existing human agents: a specialist that does things for you. My real estate agent knows more about real estate than I do, and he searches for and helps me purchase property. I wrote about this definition recently in my article, Why Agents Are the Most Useful Part of AI Chat. In Microsoft 365 Copilot, agents serve both as a signpost for users and as specialists in a narrower task.

The second is a technical definition that is just as relevant, and it is quickly merging with the philosophical definition in AI products.

The technical definition

Researchers have been trying to precisely define the word “agent” for more than 30 years. It’s nearly as difficult to define as “artificial intelligence.” But until recently, the technology was so far away that it was only an academic concern. It’s become a bit of a hot topic lately.

Simon Willison, an open source developer and AI sommelier, has a hobby of coining terms, or sometimes more precisely defining existing terms. His most recent success was “prompt injection,” a topic I’ll have to write about soon. He has been attempting to define “agent” now for a few years, collecting dozens to hundreds of definitions from all over the internet.

I suggested to him a few months ago that most definitions center on one of two ideas: an LLM calling multiple tools in a loop, or an LLM taking dynamic steps toward a goal. He’s finally ready to declare a winner:

An LLM agent runs tools in a loop to achieve a goal.

I’m glad he agreed with me and found such a nice way to merge them! Let’s consider why this definition is important.

LLM + Tool + Loop

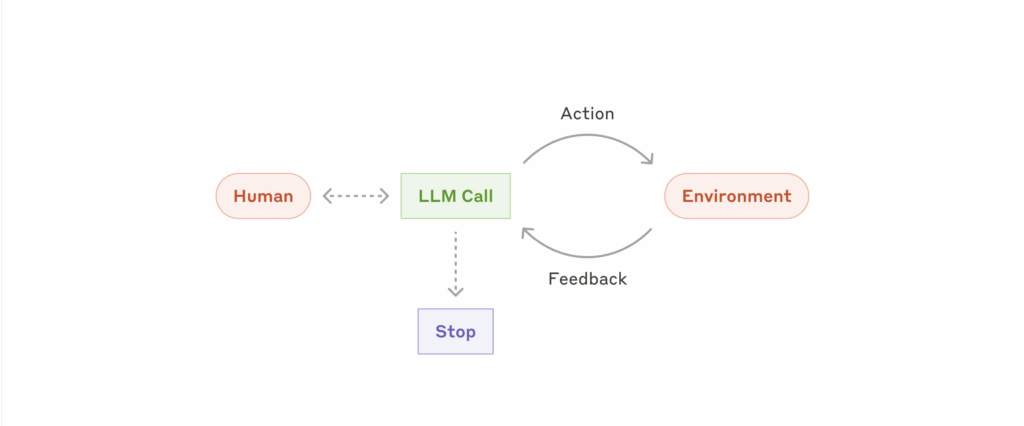

In one of my presentations, I use this diagram from Anthropic:

You can see the parts of Simon’s definition. The “tool” is the action and the feedback. Why it matters to “loop” until a Stop condition takes a bit more explanation.

We’ve been able to write software that can use tools for a long time; even output to the monitor is a kind of tool. In programming terms, a “tool” is simply the interface for interacting with another piece of software or another computer. Frequently, software checks the result as well: did the record save to the database correctly? Good software does different things based on the results of tools like these.

Back in the 1980s, much of the AI field was developing the most complicated tool-calling software possible: expert systems. Expert systems are made up of extremely complicated logic developed by humans and put into software. This software checked the results of tools and also decided what action to take next.

It’s this piece of deciding what action to take that is important in this definition of “agent.” Expert systems could make decisions only with the logic that was explicitly programmed ahead of time. Large Language Models turn this on its head. An “agent” can try something, see if it worked, and then decide what to try next. Quite suddenly, software can make decisions on any topic without explicit programming to handle it.
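To make the loop concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: `fake_llm` is a hard-coded stand-in for a real model call, and `save_to_primary` and `save_to_backup` are hypothetical tools. The only point is the shape: decide, act, observe, and repeat until the model says stop.

```python
from typing import Callable

# Hypothetical tools: ordinary functions the "model" is allowed to call.
def save_to_primary(record: str) -> str:
    return "error: primary database unavailable"

def save_to_backup(record: str) -> str:
    return f"ok: saved {record} to backup"

TOOLS: dict[str, Callable[[str], str]] = {
    "save_to_primary": save_to_primary,
    "save_to_backup": save_to_backup,
}

def fake_llm(goal: str, history: list[tuple[str, str]]) -> dict:
    """Stand-in for a real model: picks the next action from what it has seen so far."""
    if not history:
        return {"action": "save_to_primary", "input": goal}
    last_tool, last_result = history[-1]
    if last_result.startswith("error"):
        # The first attempt failed, so try something different.
        return {"action": "save_to_backup", "input": goal}
    return {"action": "stop", "answer": last_result}

def run_agent(goal: str, max_steps: int = 5) -> str:
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):
        decision = fake_llm(goal, history)         # decide
        if decision["action"] == "stop":           # stop condition
            return decision["answer"]
        tool = TOOLS[decision["action"]]
        result = tool(decision["input"])           # act
        history.append((decision["action"], result))  # observe the feedback
    return "stopped: step limit reached"

print(run_agent("invoice 42"))
```

Here the agent tries the primary database, sees the error, switches to the backup, and then stops. In this sketch the decision is still a pair of `if` statements, which is really an expert system in miniature; in a real agent that decision comes from the model, and that is the part that changes everything.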

That’s a powerful statement: software can now make its own decisions.

Decide what?

AI like the earliest ChatGPT could not do anything except produce a chat message. It was like a brain without any hands. Modern AI’s first new tool was letting the LLM search the internet in Bing Chat. At first this meant making a single web search, but it quickly became “agentic search.” The first search results are returned, the LLM reviews them, and then it decides whether to make another web search. All of that information is used to send a message back to you. Results are much better when the AI can iterate on the search and try multiple times.
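As a rough sketch of that search, review, and search-again cycle, the Python below uses a tiny in-memory dictionary in place of the web and a hard-coded `review` function in place of the LLM. Both are assumptions made up for this example, as are the names `web_search` and `agentic_search`; a real product would call an actual search API and an actual model.

```python
from typing import Optional

# Hypothetical stand-in for the web: a tiny in-memory index.
FAKE_INDEX = {
    "agent definition": ["An LLM agent runs tools in a loop to achieve a goal."],
    "agent definition examples": ["Agentic search repeats web searches until it has enough."],
}

def web_search(query: str) -> list[str]:
    """Hypothetical search tool: returns matching snippets, or nothing."""
    return FAKE_INDEX.get(query, [])

def review(question: str, notes: list[str]) -> Optional[str]:
    """Stand-in for the LLM's review step: a follow-up query, or None when satisfied."""
    if len(notes) < 2:
        return question + " examples"
    return None

def agentic_search(question: str, max_searches: int = 3) -> str:
    notes: list[str] = []
    query: Optional[str] = question
    searches = 0
    while query is not None and searches < max_searches:
        notes.extend(web_search(query))   # call the tool
        searches += 1
        query = review(question, notes)   # decide whether to search again
    # Everything gathered along the way feeds the final message.
    return f"Answer based on {searches} searches: " + " ".join(notes)

print(agentic_search("agent definition"))
```

The `max_searches` cap is a design choice worth keeping in any real version: it guarantees the loop ends even when the searches keep coming back empty.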

Agentic search is very effective and solves a big need we have in the world: finding the information we need and synthesizing it. This is a good scenario for agents in Microsoft 365 Copilot too. Combining the different search tools (sometimes called knowledge sources) gives you a bespoke information-finding and information-synthesizing agent.

When combined with instructions about how to search and how to synthesize, agents that call a search tool in a loop can:

- Get you caught up on a project after vacation

- Prepare some quick notes on the work you accomplished for the day

- Teach you policies and procedures

- Answer FAQs about a product

- Write research reports

- And many more

Agentic search is great, but there is so much more that agents can do when we give them more hands.

“Doing” tools

We often complain that AI can’t perform the simplest tasks in our lives. It can’t check out a digital library book or pair Bluetooth headphones. But once we add tools to an agent, that’s exactly what it can do. In theory, agents can do anything digital, and increasingly they can in practice too. Software brains couldn’t act on their own, but all they need are some hands.

When an LLM can call a tool, see the results, and decide what to do next, we can do better than checking out a library book:

- You ask your agent to find a book on the history of the US immigration system

- The agent searches Goodreads for what you’ve already read

- Then it searches the internet for the highest-rated books about immigration

- It checks with the library for availability and checks out a book

- It may even write a personalized intro based on the book’s contents, relating it to the books you’ve read before

No AI has built-in integrations with Goodreads, web search, and your local library. But if this sounds like an interesting scenario to you or your customers, you could absolutely set it up. If it’s valuable enough, you could easily build a business around a brain that has the necessary hands to accomplish your goals.

Add tools to your agent

Once you identify what problem your agent should solve, you can and should add the tools your agent needs for its role.

We try to make it as easy as possible to add more tools to your agents in Microsoft 365 Copilot. Even so, it does take intentionality; it isn’t as easy as the retrieval tools we enable as knowledge in Copilot Studio Lite (formerly Agent Builder).

An easy way to add your own tools to an agent is through a low-code platform like Dify or Microsoft Copilot Studio. Copilot Studio’s custom agents support more than 1,400 tools (connectors) out-of-the-box, and they cover just about everything. On the other hand, the vast majority of connectors and APIs available on the internet were set up to be called by pre-configured, deterministic code, not by LLMs and agents. You’ll have to do some fiddling with most of them to get them to work well.

Model Context Protocol (MCP) is a partial solution to this problem. MCP isn’t inherently better than the APIs that came before, but MCP servers have an advantage: they were all built specifically to be called by agents. They necessarily come with documentation the AI can discover. Not all MCP servers are good quality, but they have a leg up on existing programmatic interfaces. It is very easy to add MCP servers to Copilot Studio and then select the tools you want to add to your agent.
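To show what “documentation the AI can discover” can look like, here is a small Python sketch of a tool described in the shape an MCP server uses when it lists its tools: a `name`, a `description`, and an `inputSchema` written as JSON Schema. The `check_out_book` tool and both helper functions are invented for this example and are not part of any real server or SDK.

```python
# Hypothetical tool, written in the shape an MCP server uses to list its tools.
CHECK_OUT_BOOK = {
    "name": "check_out_book",
    "description": "Check out a digital library book for the signed-in patron.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "isbn": {"type": "string", "description": "ISBN-13 of the book"},
            "days": {"type": "integer", "description": "Loan length in days"},
        },
        "required": ["isbn"],
    },
}

def describe_for_model(tool: dict) -> str:
    """Render the tool's self-description as text a model could read before calling it."""
    schema = tool["inputSchema"]
    required = schema.get("required", [])
    lines = [f"{tool['name']}: {tool['description']}"]
    for name, spec in schema["properties"].items():
        flag = "required" if name in required else "optional"
        lines.append(f"  - {name} ({spec['type']}, {flag}): {spec['description']}")
    return "\n".join(lines)

def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """List required parameters the model forgot, so the agent can retry the call."""
    return [k for k in tool["inputSchema"].get("required", []) if k not in arguments]

print(describe_for_model(CHECK_OUT_BOOK))
print(missing_arguments(CHECK_OUT_BOOK, {"days": 14}))
```

A description like this is what many older connectors lack: the parameter names exist, but nothing tells the model what they mean or which ones it must supply.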

There are still only a handful of MCP servers available over the internet. For everything else, I recommend letting an AI coding agent help. After all, coding agents are some of the most valuable agents out there, and they are great at using tools now! A great way to do this is with TypeSpec in the Microsoft 365 Agents Toolkit. This article is long enough, so I suggest going through this excellent lab exercise. You’ll be a pro in no time.

Agents Need Tools

Searching, retrieving, and synthesizing information is a big deal; just look at Google’s market cap. Agentic search with web search tools is a superpower that is changing the world through deep research tools. Imagine what could happen if we accelerated every other domain to the same degree!

LLMs have the brain already. You choose the hands that make them agents.