For millennia, there was only one thing you could talk to: a human. If you and another person spoke the same language, you could communicate. What does it mean that you can now talk to an AI? And that an AI can talk to an AI?

There are many examples of AI backed by LLMs interacting with humans in the same ways that humans interact with humans. While this is absolutely valuable, I think there is more. AI surpasses human limitations in memory, speed, and now reasoning power. Can’t we go further? The first products with AI talking to other AI are starting to appear now. They are doing so in ways that humans have never communicated.

Let’s explore every pattern of communication that AI is using now as well as what may come next.

One-on-one

Think of how often you have a private conversation with one other person. You may read a bedtime story to your child, tell a joke to your friend, or describe your problems to a therapist. Or at work you brainstorm with a colleague, discuss tasks with your boss, and congratulate an employee on a project milestone.

How powerful this is! One-on-one conversations are the most productive and information-dense way of communicating. Short of writing an entire book, they are the only way to work out and share deep ideas.

It is little wonder that virtually all AI chatbots are implemented as one-on-one conversations! ChatGPT’s first important role was as your conversation partner and intern-level text editor. Character.ai will jump right into intimate conversation or will act as your therapist. Gemini and Copilot make good research or personal assistants through one-on-one conversation.

But this isn’t the only way that we communicate with humans. What else could AI be?

Conference

If you’re an information worker, you know all about meetings. As ineffective as they usually are, they remain the most effective way for three or more people to collaborate.

There’s a challenge for an AI when multiple people are talking: when should it respond? The usual AI pattern treats every message as a prompt that demands a reply; in a meeting, this would be fantastically annoying. AI needs a mechanism for knowing when it is being spoken to, or at least when a response would be helpful.

One AI that works in conference with multiple people is Facilitator in Microsoft Teams. It operates in two ways:

- Proactively volunteering specific kinds of information at set points in the meeting. For instance, a few minutes into the meeting, Facilitator will describe the agenda as best it can tell from the conversation.

- Responding when directly addressed. Teams does this with what we call an “at mention”: the user types “@Facilitator” as a signal that Facilitator should respond.

We can imagine an AI agent listening to many people in a conversation, and only sometimes deciding to respond itself. I’m not aware of this being implemented yet, but I expect a reasoning model could decide whether to respond in its reasoning before writing any user-visible tokens. Maybe I’ll try this with o4-mini!
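The two triggers described above can be sketched in a few lines of Python. This is only an illustration and not how Facilitator is actually built: `Message`, `ConferenceAgent`, `is_addressed`, and the `agenda_after` message count (standing in for "a few minutes into the meeting") are all hypothetical names and assumptions.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    sender: str
    text: str


def is_addressed(message: Message, agent_name: str) -> bool:
    """Return True when the text contains an @mention of the agent (case-insensitive)."""
    # The lookbehind skips things like e-mail addresses; \b skips longer names.
    pattern = r"(?<!\w)@" + re.escape(agent_name) + r"\b"
    return re.search(pattern, message.text, flags=re.IGNORECASE) is not None


class ConferenceAgent:
    """Hypothetical agent that stays quiet unless one of two triggers fires."""

    def __init__(self, name: str, agenda_after: int = 3) -> None:
        self.name = name
        self.agenda_after = agenda_after  # assumption: count messages, not minutes
        self.seen = 0
        self.agenda_posted = False

    def on_message(self, message: Message) -> Optional[str]:
        """Return a reply, or None when the agent should stay silent."""
        self.seen += 1
        # Trigger 1: someone addressed the agent directly.
        if is_addressed(message, self.name):
            return f"{message.sender}, I heard: {message.text}"
        # Trigger 2: volunteer the agenda once, at a set point in the meeting.
        if not self.agenda_posted and self.seen >= self.agenda_after:
            self.agenda_posted = True
            return "Here is the agenda as best I can tell so far."
        return None
```

A reasoning model could replace the final `return None` branch with its own judgment about whether a reply would help; the point of the sketch is only that silence is a valid output.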

Hand-off (transfer)

Human communication is also sometimes transferred to another person. Often this is unpleasant, such as when a customer service representative hands you off to an even less helpful department. Perhaps a better example is calling an office receptionist, who schedules appointments and transfers your call to the right person for other tasks.

You don’t see this in ChatGPT, but many business website chat support bots have the hand-off pattern. You begin a conversation with an AI, and it later transfers you to a human representative if it can’t solve the problem itself. Personally, I find that a transfer is nearly always needed. Maybe it is because if the answer were straightforward I would have found the information myself. Meanwhile, businesses are sensibly unwilling to let AI give false information to their visitors.

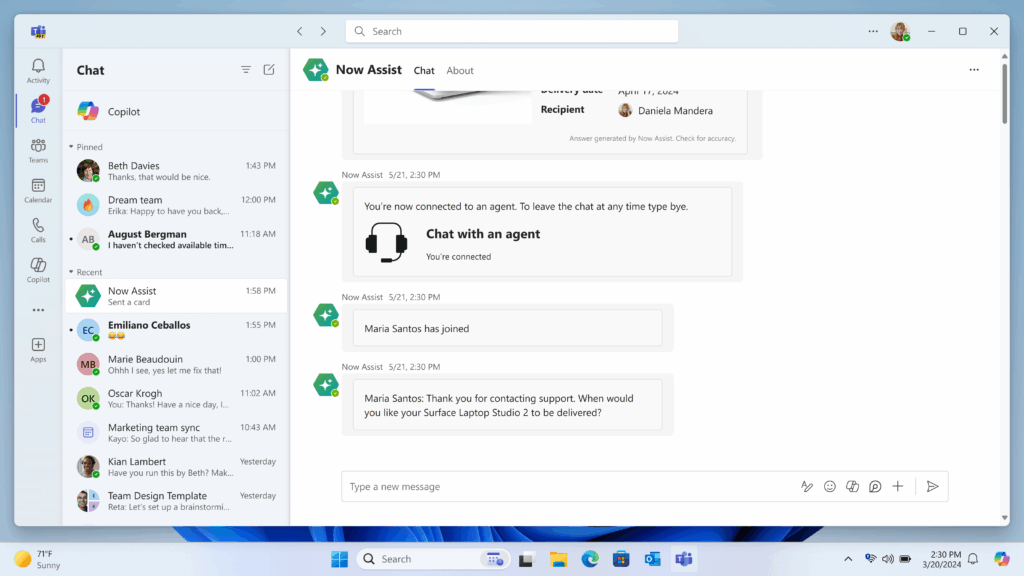

Those examples are all “blind” hand-offs, but there’s also a “supervised” hand-off pattern. We tried this in Microsoft 365 Copilot with ServiceNow. The ServiceNow AI agent would begin a new conversation that put the user, itself, and a human representative in a three-person conference. The potential advantage of a supervised hand-off is that the context of the conversation could be kept, or the AI could continue to help with future tasks.
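The difference between the two hand-offs is easy to see as data. The following is a minimal sketch under my own assumptions, not the Copilot or ServiceNow implementation: `Conversation`, `blind_handoff`, and `supervised_handoff` are hypothetical names, and I assume a blind hand-off starts the human with no history.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Conversation:
    participants: List[str]
    history: List[str] = field(default_factory=list)


def blind_handoff(conv: Conversation, agent: str, human: str) -> Conversation:
    """The agent drops out and the human starts fresh (assumed: no history carried)."""
    remaining = [p for p in conv.participants if p != agent]
    return Conversation(participants=remaining + [human])


def supervised_handoff(conv: Conversation, human: str) -> Conversation:
    """The agent stays, the human joins, and the context is kept."""
    return Conversation(
        participants=conv.participants + [human],
        history=list(conv.history),  # copy so the original conversation is untouched
    )


original = Conversation(["user", "support_bot"], ["user: my laptop won't boot"])
blind = blind_handoff(original, agent="support_bot", human="human_rep")
supervised = supervised_handoff(original, human="human_rep")
```

In the supervised case the result is simply a conference, so the same rules about when the AI should speak apply after the transfer.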

Sidebar

The AI agent pattern that has me most excited right now is “sidebar.” In episodes of the classic Law & Order series, the prosecutors would often meet with the defendant and the defendant’s attorney. Instead of immediately answering a question, the defendant would check with their attorney to see how they should respond. Perhaps a more common example is when I am asked on the phone to help with some task or attend some function. If I can, I’ll mute my phone and quickly check our schedule with my wife!

I believe this pattern has a lot of potential for AI agents. At Microsoft Build last week, we showed a demo of the new Researcher agent using another agent as one of its sources. The way this works is just like how I check our schedule with my wife. Researcher sends a question to the connected agent as if it were a user in a one-on-one conversation. The response from the second agent is used as grounding for the final report, or Researcher can continue to ask more questions.

The power of this is that you don’t need to go talk to each of these agents yourself. You don’t even necessarily need to know which agent has the information you need! We see the same situation among the ultra-rich: they have a head butler to work with the rest of their staff. I believe that this pattern is practically necessary once you move beyond a handful of personal agents.
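Here is a rough sketch of a sidebar exchange, assuming each connected agent can be modeled as a plain function from a message to a reply. None of this reflects how Researcher is implemented; `Agent`, `calendar_agent`, and `answer_with_sidebar` are hypothetical names, and a real connected agent would be a remote call rather than a local function.

```python
from typing import Callable, Dict

# Assumption: an agent is anything that takes a user-style message and returns text.
Agent = Callable[[str], str]


def calendar_agent(message: str) -> str:
    """Stand-in for a connected agent with a canned answer."""
    return "Friday evening is free."


def answer_with_sidebar(user_question: str, connected: Dict[str, Agent]) -> str:
    """Ask each connected agent privately, then fold the replies into one answer."""
    grounding: Dict[str, str] = {}
    for name, agent in connected.items():
        # The connected agent sees an ordinary one-on-one message; it does not
        # need to know that another agent, not a person, is asking.
        grounding[name] = agent(user_question)

    lines = [f"Question: {user_question}"]
    lines += [f"- {name}: {reply}" for name, reply in grounding.items()]
    return "\n".join(lines)


report = answer_with_sidebar(
    "Can we attend dinner on Friday?", {"calendar": calendar_agent}
)
```

The user only ever sees `report`. Which agents were consulted, and how many follow-up questions it took, stays inside the sidebar.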

Pass-through

As far as I’m aware, pass-through conversations exist only in science fiction. This would be where you ask a question to one agent, which routes it to a better agent to respond. It may tell you which entity it is acting as, or it could hide that information.

The closest analog for human communication is routing mail that is addressed to a company office. There can’t be a real-time human pass-through, however, because humans cannot instantly become other humans.

It’s interesting to think of how this new-found pattern of communication may be valuable to us. One area that I’m exploring is a baby step in this direction: after the primary agent responds, it can suggest that the user also ask another agent. We will see if this is helpful!
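To make the pass-through idea concrete, here is a toy router. It is a sketch under loud assumptions: routing is done by naive keyword overlap, and `pick_agent`, `pass_through`, the `disclose` flag, and the two example agents are all hypothetical. A real system would more likely ask a model to choose the agent.

```python
import re
from typing import Callable, Dict, Set

Agent = Callable[[str], str]


def pick_agent(question: str, keywords: Dict[str, Set[str]]) -> str:
    """Choose the agent whose keyword set overlaps the question the most."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    # On a tie (including no matches at all), the first agent listed wins,
    # so the first entry acts as the default.
    return max(keywords, key=lambda name: len(words & keywords[name]))


def pass_through(
    question: str,
    agents: Dict[str, Agent],
    keywords: Dict[str, Set[str]],
    disclose: bool = True,
) -> str:
    """Route the question to the chosen agent and relay its reply."""
    name = pick_agent(question, keywords)
    reply = agents[name](question)
    # The front agent may say which entity it is acting as, or hide it.
    return f"[{name}] {reply}" if disclose else reply


agents: Dict[str, Agent] = {
    "travel": lambda q: "Let's plan your trip.",
    "finance": lambda q: "Converting currency.",
}
keywords: Dict[str, Set[str]] = {
    "travel": {"trip", "flight", "hotel"},
    "finance": {"convert", "euros", "dollars", "currency"},
}

print(pass_through("Can you convert euros to dollars?", agents, keywords))
```

Setting `disclose=False` gives the fully hidden variant, where the user never learns that a different agent answered.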

Giving it a try

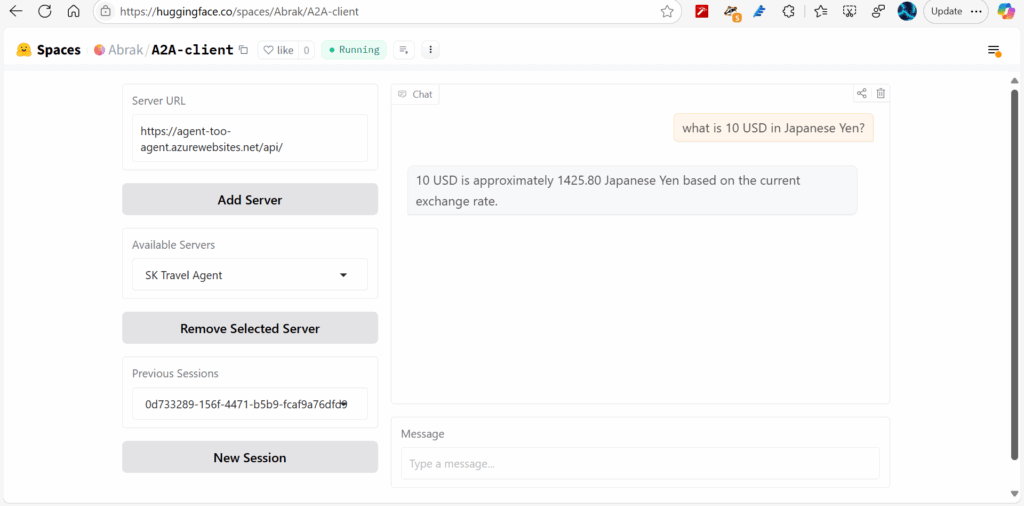

I have built my own test bed for these different patterns of communication. I developed an Agent2Agent server, described in a previous article, and now I’ve added a chat client.

You can use it here: A2A Client – a Hugging Face Space by Abrak. Be sure to click Add Server before you chat; select any public Agent2Agent server. The default server is a Travel Agent, so you can ask for trip planning advice and currency conversions.

For now my client implements only one-on-one conversation between you and the agent. It does support sessions, although not yet conversation history. My goal is to implement each one of the AI agent communication patterns. Sidebar is the most obvious next step, using Agent2Agent to handle communication, but perhaps it should be the first to implement pass-through?

We’ve gotten used to one-on-one and conference conversations. AI is advancing at breathtaking speed, and it won’t be long before multiple AI agents can collaborate in ways we never could. We will have to adjust quickly. The best thing to do is to try it out. Stay tuned for the next features I add to my Agent2Agent project!

The IETF published a list of communication patterns, inspiring this article: Framework, Use Cases and Requirements for AI Agent Protocols.