Goodnight, orchestrator. Goodnight, harness. Goodnight, application.

If you think ChatGPT is a Large Language Model (LLM), you are sadly mistaken. ChatGPT began as an LLM, but now it is quite a lot more than that.

You may have heard some discussion about M365 Copilot’s advanced orchestrator. Or perhaps you saw in the news that Google had to pull a model out of their AI Studio product because it didn’t have safeguards.

Most folks are confused about these terms. I’ve been having a lot of conversations recently with customers and partners about the word “orchestrator,” and everyone means something different by it.

This article will help you understand why there are such strong differences between chat products that are built on the same foundation models. Failing that, you can at least act smugly superior to your colleagues that don’t read my newsletter! We’ll discuss a modern AI chat product’s orchestrator, harness, and application.

The Orchestrator

The first thing to know is that our industry hasn’t decided on a universal definition of LLM orchestration. This may be because each product takes a different approach to it, emphasizing this or that feature. I think most AI engineers would count these functions as part of orchestration:

- System prompt: If you’ve heard of one bit of chat product internals, it is probably the system prompt. This is the set of instructions to the model that govern its behavior when predicting tokens.

- Decision loop: Few folks realize that modern chat products rarely work like LLM APIs. When you call an LLM directly, your prompt goes straight to the model, and the model’s response comes straight back to you. AI products, by contrast, have a decision loop: a multi-step process in which the orchestrator gets a response from the model and decides whether to call it again. Products like Researcher in Microsoft 365 Copilot may loop hundreds of times before they return a response to the user.

An iterative loop of Reason → Retrieve → Review for each subtask, collecting findings on a scratch pad until further research would unlikely yield any new information, at which point they would synthesize the final report. –Gaurav Anand on the Microsoft 365 Copilot Blog

- Model routing: OpenAI famously added model routing to its release of GPT-5, although Azure Foundry already offered the feature. ChatGPT decides whether a query needs a fast response or a slower but more comprehensive one, and sends it to GPT-5-Chat or GPT-5-Thinking accordingly.

- Context management: This is where Retrieval Augmented Generation (RAG) fits in. Before sending a prompt to a model, the orchestrator may run searches for related information. It includes those search results in the context sent to the model, so the LLM is likely to use that information instead of hallucinating its own. There is a lot of complexity here; Andrej Karpathy, who coined the term “vibe coding,” has helped popularize the name “context engineering” for this area.

- RAI/filters: Responsible AI (RAI) is a catch-all term for making it difficult to generate unwelcome responses. These range from relatively benign but bad-for-press cursing to potentially dangerous instructions for making bioweapons. The simplest guardrail is a text filter that refuses to call the model if the prompt contains any unsafe words, or that cancels and deletes the model’s response if the response contains any.
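To make the list above more concrete, here is a toy Python sketch of how an orchestrator might assemble a single model call from a system prompt, a router, retrieved context, and a word-list filter. Everything in it is hypothetical: the prompt text, the model names, the blocklist, and the three-document “index” are placeholders, and real products use trained classifiers and search services rather than keyword matching.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical system prompt; real products' prompts are much longer.
SYSTEM_PROMPT = "You are a helpful assistant. Prefer the provided sources over your own recollection."

# Hypothetical blocklist for the simplest possible guardrail.
BLOCKED_PHRASES = ("bioweapon", "nerve agent")

# A tiny stand-in for a search index, used for retrieval (RAG).
DOCUMENTS = [
    "Q3 revenue grew 12% over Q2.",
    "The Contoso office moves to Building 4 in May.",
    "Expense reports are due on the 5th of each month.",
]


@dataclass
class ModelRequest:
    model: str
    messages: list


def tokens(text: str) -> set:
    """Lowercase word tokens with punctuation stripped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def is_unsafe(text: str) -> bool:
    """Naive text filter: flag any blocked phrase."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in BLOCKED_PHRASES)


def route_model(prompt: str) -> str:
    """Toy router: long or analytical prompts go to a slower reasoning model."""
    cues = ("analyze", "compare", "step by step")
    if len(prompt.split()) > 40 or any(cue in prompt.lower() for cue in cues):
        return "reasoning-model"  # hypothetical model name
    return "fast-model"  # hypothetical model name


def retrieve(prompt: str, k: int = 2) -> list:
    """Toy retrieval: rank documents by word overlap, keep up to k with any overlap."""
    query = tokens(prompt)
    ranked = sorted(DOCUMENTS, key=lambda doc: len(query & tokens(doc)), reverse=True)
    return [doc for doc in ranked[:k] if query & tokens(doc)]


def build_request(prompt: str) -> Optional[ModelRequest]:
    """Assemble one model call, or return None if the input filter blocks it."""
    if is_unsafe(prompt):
        return None  # the model is never called
    sources = retrieve(prompt)
    system = SYSTEM_PROMPT
    if sources:
        system += "\n\nSources:\n" + "\n".join(f"- {doc}" for doc in sources)
    return ModelRequest(
        model=route_model(prompt),
        messages=[{"role": "system", "content": system},
                  {"role": "user", "content": prompt}],
    )


def filter_response(response: str) -> str:
    """Output filter: replace the whole response if it contains a blocked phrase."""
    return "Sorry, I can't help with that." if is_unsafe(response) else response
```

Each function is a separate seam that a product team can tune independently, which is one reason two products built on the same model can behave so differently.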

There’s a lot more to say about each of these orchestrator features; I could write a blog post about each one. And they are often combined into a whole sequence of calls to LLMs! An overall control flow may dispatch subtasks to several different LLMs, each with its own system prompt and context management strategy.
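The decision loop is the control flow that ties these pieces together. The sketch below, loosely modeled on the Reason → Retrieve → Review loop quoted above, calls a model repeatedly and records findings on a scratch pad until the model produces a final answer. The model is a scripted stand-in, and the `SEARCH:`/`FINAL:` reply convention, the `search` function, and the step limit are all hypothetical; this is not how Researcher or any other product is actually built.

```python
from typing import Callable


def search(query: str) -> str:
    """Hypothetical search tool; a real orchestrator would query an index or the web."""
    fake_index = {
        "gpt-5 release": "GPT-5 shipped with a router between fast and thinking models.",
        "context engineering": "Context engineering is curating what goes into the model's context.",
    }
    return fake_index.get(query.lower(), "No results.")


def fake_model(scratch_pad: list) -> str:
    """Scripted stand-in for an LLM: requests two searches, then writes a final answer."""
    planned = ["SEARCH: GPT-5 release", "SEARCH: context engineering"]
    searches_done = sum(1 for note in scratch_pad if note.startswith("Result"))
    if searches_done < len(planned):
        return planned[searches_done]
    # Synthesize the final answer from the findings collected so far.
    return "FINAL: " + " ".join(note.split(": ", 1)[1] for note in scratch_pad)


def decision_loop(model: Callable[[list], str], max_steps: int = 10) -> tuple:
    """Call the model until it returns a final answer or the step limit is reached."""
    scratch_pad: list = []
    for step in range(1, max_steps + 1):
        reply = model(scratch_pad)
        if reply.startswith("FINAL:"):
            return reply.removeprefix("FINAL:").strip(), step
        if reply.startswith("SEARCH:"):
            query = reply.removeprefix("SEARCH:").strip()
            scratch_pad.append(f"Result for {query}: {search(query)}")
    return "Stopped: step limit reached.", max_steps


answer, steps = decision_loop(fake_model)
print(steps, answer)
```

The step limit matters in practice: without one, a model that never decides it is done would loop forever.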

The Harness

“Harness” is a relatively new term floating around AI circles. Lately I’ve seen it used mostly for coding agents, where results vary widely depending on which code-editing tools you give the model. It’s a good word, though: a horse’s harness lets it move external equipment (like a carriage). I think the word should apply to tool use broadly, not only to coding agents.

We’ve really only found three things that LLMs can do, structurally: write text to the user, write text to itself (thinking), and call a tool. But how can a model call a tool, such as one that creates a work ticket, when all it does is predict words? Simple: it predicts the words, “call create_ticket with parameters Title: write new AI harness”.

The LLM doesn’t actually create the ticket. The harness sees that the model wants to call this tool, calls the tool on the model’s behalf, and then inserts the result into the model’s context as if the model had predicted all those words. This lets the model reason over these results as well.
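Here is a minimal sketch of that harness step in Python. It assumes the model emits its tool call as a small JSON object rather than the prose form above (real APIs use structured tool-call formats, but this exact shape is hypothetical), and `create_ticket` is an in-memory stand-in for a real ticketing system.

```python
import json

# Hypothetical in-memory ticket store standing in for a real ticketing API.
TICKETS: list = []


def create_ticket(title: str) -> dict:
    """Hypothetical tool: create a ticket and return it."""
    ticket = {"id": len(TICKETS) + 1, "title": title}
    TICKETS.append(ticket)
    return ticket


# The harness only runs tools it has registered.
TOOLS = {"create_ticket": create_ticket}


def run_harness_step(model_output: str, context: list) -> list:
    """Record the model's output; if it is a tool call, run the tool and record the result."""
    context.append({"role": "assistant", "content": model_output})
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return context  # ordinary text for the user, not a tool call
    tool_name = call.get("tool") if isinstance(call, dict) else None
    if not isinstance(tool_name, str) or tool_name not in TOOLS:
        return context
    result = TOOLS[tool_name](**call.get("arguments", {}))
    # Insert the result into the context so the model can reason over it on its next turn.
    context.append({"role": "tool", "content": json.dumps(result)})
    return context
```

For example, `run_harness_step('{"tool": "create_ticket", "arguments": {"title": "write new AI harness"}}', [])` creates ticket 1 and appends its JSON to the context; the model never touches the ticketing system itself.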

A harness also handles files. If you upload a video file, is it turned into tokens or summarized? If you type a URL, is it fetched? When the model generates a CSV, can you download it in Excel format, or download it at all?
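A harness has to make these decisions for every input. The sketch below shows one possible dispatch policy using Python’s standard `mimetypes` and `urllib.parse` modules; the action names and the size threshold are hypothetical, and a real product’s policy depends on what its models and tools support.

```python
import mimetypes
from urllib.parse import urlparse

# Hypothetical threshold: text files larger than this go through retrieval instead.
MAX_DIRECT_BYTES = 200_000


def plan_input(item: str, size_bytes: int = 0) -> str:
    """Decide how a (hypothetical) harness should handle one user input."""
    parsed = urlparse(item)
    if parsed.scheme in ("http", "https") and parsed.netloc:
        return "fetch"  # download the page and add its text to the context
    mime, _encoding = mimetypes.guess_type(item)
    if mime is None:
        return "reject"
    if mime.startswith(("video/", "audio/")):
        return "transcribe-and-summarize"
    if mime.startswith("image/"):
        return "send-as-image"  # assumes a multimodal model
    if mime.startswith("text/") and size_bytes <= MAX_DIRECT_BYTES:
        return "tokenize"  # small text goes straight into the context
    return "chunk-and-index"  # large or binary documents are searched, not pasted in
```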

Harness design and tool quality drive large quality differences among AI chat products. One obvious example is image generation, where products have differed widely over the last few years, not only in image quality but also in the ability to edit previous images. A harness makes or breaks an AI product.

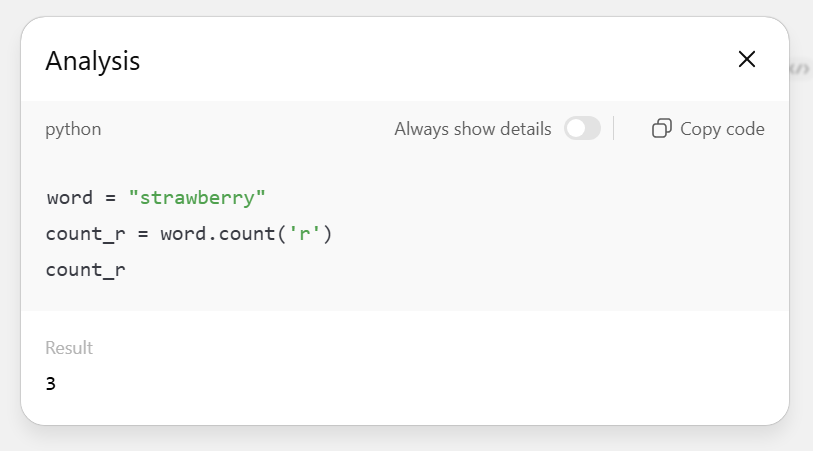

Special harness tool: code interpreter

It’s worth calling out the ability to author and then execute code as a unique feature of a good AI harness. Instead of writing a tool name and parameters for the harness to execute, the model writes a complete program. The harness then executes that code in a safe sandbox and returns the result to the model.

Executing code is simple and very powerful.
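The execute-and-return step can be sketched in a few lines, assuming the model writes Python. The function name and timeout are hypothetical, and note the caveat in the docstring: a separate process with a timeout is not a secure sandbox.

```python
import subprocess
import sys


def run_model_code(code: str, timeout_s: float = 5.0) -> str:
    """Run model-written Python in a separate process and return what it printed.

    A child process with a timeout is NOT a security sandbox; real code
    interpreters add containers or VMs, network isolation, and resource limits.
    """
    try:
        completed = subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: ignore PYTHON* env vars and user site-packages
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return "Error: execution timed out."
    if completed.returncode != 0:
        return "Error:\n" + completed.stderr.strip()
    return completed.stdout
```

Whatever string comes back, output or error, goes into the model’s context, so the model can read a traceback and try again.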

One benefit of executing code is that LLMs are excellent at authoring it. They’re even better at code than they are at natural language! More significantly, this is how AI products get past their shortcomings like spelling and arithmetic.

Not only can a code interpreter reliably count the ‘r’s in “strawberry,” it can also perform exact calculations far beyond what a human could do by hand.
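For example, the throwaway program a model might write for these questions could look like the following. This is an illustrative sketch, not output captured from any product.

```python
import math
from fractions import Fraction

# Counting letters is trivial in code, even though it trips up token-based models.
r_count = "strawberry".count("r")
print(r_count)  # 3

# Python integers have arbitrary precision, so 100! is computed exactly.
digits_in_100_factorial = len(str(math.factorial(100)))
print(digits_in_100_factorial)  # 158

# Exact rational arithmetic: the 10th harmonic number, with no rounding error.
harmonic_10 = sum(Fraction(1, k) for k in range(1, 11))
print(harmonic_10)  # 7381/2520
```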

The Application

The orchestrator and the harness together define the core quality of an AI chat product. But matching ChatGPT, Gemini, Grok, or Copilot takes a lot more.

Of course, a modern application needs the standard set of necessities: login screens, settings, system telemetry, usage limits, and so on.

Beyond those, the industry is still figuring out how to help users get the most out of the product. The only AI-specific feature included in the first version of ChatGPT was the ability to start a new conversation. It was months later that you could navigate to a previous conversation; in the meantime, conversations disappeared when you did.

Now that every AI chat product displays conversation history, we’re seeing other features converge as well. Many AI products let you select different models. Most have modifiers on or near the chatbox, such as toggles to enable reasoning or image generation tools. Even M365 Copilot’s feature of @-mentioning agents was adapted into ChatGPT apps.

We have come a long way from ChatGPT’s original idea of “chatting with a model.” Yet we’re still in the early days of AI chat applications, and the same is true for orchestration and harnesses. Improvements in these areas are just as important as improvements in model quality.

So when you’re comparing AI chat products, don’t get distracted by incremental benchmark gains. They barely matter anymore. Instead, look at how the AI is orchestrated, how well it harnesses tools, and what the application actually empowers you to do.