What AI looks like internally

The mission of OpenAI and many other researchers is to develop Artificial General Intelligence (AGI). Definitions vary, but AGI usually means something like being able to do any intellectual task as well as a human can. Individual research teams are working to build AI medical diagnosticians, scientific researchers, therapists, and any other professional you can think of.

This isn’t a new objective, but the recent approach is new. Researchers used to take a narrow task, collect examples of that task, and train a custom AI to do only that task. Deep Blue, which defeated Kasparov at chess, the Facebook relevance feed, Google Translate, and nearly every other AI prior to 2021 were each developed specifically for one task. I’ve worked on many of these narrow AI features. That approach is being replaced by training one AI that can do most things.

Ever since GPT-3 was shown to be instructable, meaning it can be given a task simply by sending it a message, a different approach has taken over. Most AI research now is about training single models that are more and more capable at every task. The same GPT-4 model can now be a diagnostician, researcher, therapist, and more. We no longer need to train individual AI models for individual tasks; a single Large Language Model (LLM) seems to be able to do nearly any of them.

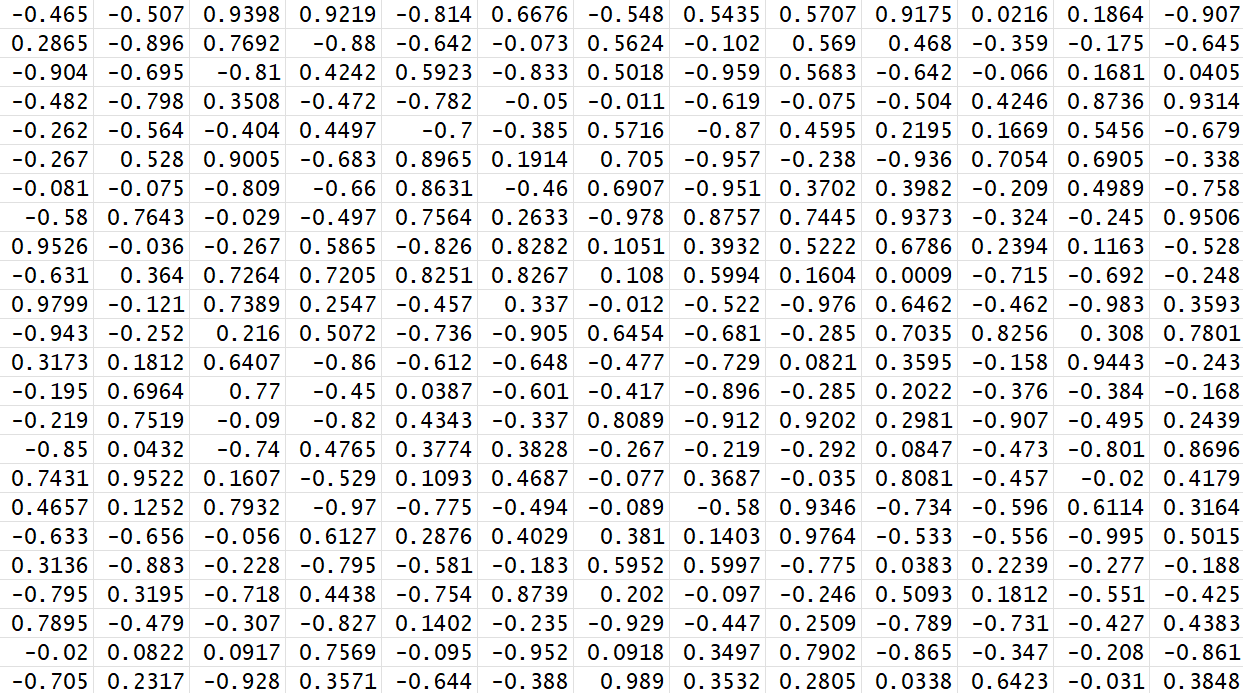

An LLM consists of the Transformer algorithm and a matrix of numbers (in practice, many matrices of learned weights). The basic Transformer inference algorithm can be implemented in just 50 lines of code. Meanwhile, the matrix consists of 1 trillion or more numbers. Somewhere in those trillion numbers is a diagnosis for your medical symptoms and advice on being happier. I believe it also contains new scientific breakthroughs.
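To make the "small algorithm, huge matrix" split concrete, here is a minimal sketch of Transformer inference in plain Python. It is an illustration under heavy simplifying assumptions, not how any production model is written: it uses a single attention head, ReLU instead of GELU, layer normalization without learned scale and shift, and no tokenizer. All names (`next_token_probs`, `random_weights`, the weight keys) are hypothetical, and the weights are random, so the output probabilities are meaningless. The point is only that the code stays tiny while everything interesting would live in the numbers.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(v):
    """Turn a list of scores into probabilities that sum to 1."""
    m = max(v)
    e = [math.exp(x - m) for x in v]
    s = sum(e)
    return [x / s for x in e]

def layer_norm(x, eps=1e-5):
    """Normalize each row to zero mean and unit variance (no learned parameters)."""
    out = []
    for row in x:
        mean = sum(row) / len(row)
        var = sum((v - mean) ** 2 for v in row) / len(row)
        out.append([(v - mean) / math.sqrt(var + eps) for v in row])
    return out

def add(a, b):
    """Element-wise sum of two same-shaped matrices (the residual connection)."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def attention(x, w):
    """Single-head causal self-attention."""
    q, k, v = matmul(x, w["wq"]), matmul(x, w["wk"]), matmul(x, w["wv"])
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        # Causal mask: position i only looks at positions 0..i.
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        out.append([sum(wt * v[j][c] for j, wt in enumerate(weights)) for c in range(d)])
    return matmul(out, w["wo"])

def mlp(x, w):
    """Two-layer feed-forward block with ReLU."""
    hidden = [[max(0.0, v) for v in row] for row in matmul(x, w["w1"])]
    return matmul(hidden, w["w2"])

def next_token_probs(tokens, w):
    """Run the whole model and return a probability for each vocabulary entry."""
    x = [[e + p for e, p in zip(w["embed"][t], w["pos"][i])] for i, t in enumerate(tokens)]
    for layer in w["layers"]:
        x = add(x, attention(layer_norm(x), layer))
        x = add(x, mlp(layer_norm(x), layer))
    logits = matmul(layer_norm(x)[-1:], w["unembed"])[0]
    return softmax(logits)

def random_weights(vocab=8, d=4, n_layers=2, max_len=16, seed=0):
    """Stand-in for the 'matrix of numbers': random values, not trained ones."""
    rng = random.Random(seed)
    mat = lambda r, c: [[rng.gauss(0, 0.5) for _ in range(c)] for _ in range(r)]
    layers = [{"wq": mat(d, d), "wk": mat(d, d), "wv": mat(d, d), "wo": mat(d, d),
               "w1": mat(d, 4 * d), "w2": mat(4 * d, d)} for _ in range(n_layers)]
    return {"embed": mat(vocab, d), "pos": mat(max_len, d),
            "layers": layers, "unembed": mat(d, vocab)}

if __name__ == "__main__":
    probs = next_token_probs([1, 2, 3], random_weights())
    print(probs)  # one probability per token in the toy 8-token vocabulary
```

Calling `next_token_probs` repeatedly, each time appending a sampled token to the input, is how text generation would proceed in this sketch. Swapping `random_weights()` for trained values is the entire difference between noise and a useful model.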

New Knowledge?

I argue that the matrix of numbers can create new knowledge, even though it was created from existing human knowledge. This is because no one person knows everything, much of science is recombining existing work, and it turns out these AIs can reason.

As the matrix of numbers becomes larger, it contains an increasing fraction of the world’s important knowledge. All scientific research, all books, pop culture trivia, and more are represented in these numbers. It may not know what I had for breakfast this morning, but it does know about my work with Copilot for Microsoft 365, and even anything interesting that I write on this blog.

That knowledge can be recombined in new ways to create new insights. As an example of combining existing knowledge to create new knowledge, consider Behavioral Economics. It’s a hot scientific field right now that combines psychology and economics. It grew out of Daniel Kahneman and Amos Tversky’s knowledge of psychology meeting Richard Thaler’s knowledge of economics. Applying this combination has led to many breakthroughs, even though both fields were already established. There’s much more opportunity to do this. New scientific knowledge also needs to be proven, however. Although models cannot themselves carry out real-world experiments, it turns out that models can simulate human behavioral experiments with fair results. I believe upcoming AI will help us identify new combinations of knowledge that have important results in the world.

Finally, researchers discovered that when you ask a language model to output its “thinking,” it can create and follow new reasoning. The building blocks of reasoning come from human logic, but there are always new questions to reason over. If a language model can reason its way to new thoughts on any question, building on the sum of useful human knowledge, and can define experiments (and sometimes run them), it’s performing science and creating brand new knowledge.

The Final Invention

We may still need humans to identify the problems to solve, but a sufficiently advanced AI will be able to write the answer or design the machine that performs the work. An AI that can do this is then the last thing we need to invent; we would call it an Artificial General Intelligence. It seems we have only recently learned the shape this AGI may take.

The final invention we need is a giant matrix of numbers, maybe 10 million or more to a side (roughly 100 trillion numbers). All that’s left to do is figure out what order to arrange those numbers in! It’s a hard problem, but we are rocketing towards this result. We won’t know ourselves what any individual numbers mean, but in combination, these numbers can answer any question we have as a species.