A favorite pastime on any social media site is complaining about how bad its search engine is. Whether it’s Reddit, Twitter/X, LinkedIn, or another social media empire (Facebook users don’t even try), searching for a post you just saw, or even one you wrote yourself, fails more often than it succeeds. You have probably experienced this: whenever you try to find that post you saw yesterday, it seems to have vanished into a black hole.

But these companies famously have armies of data scientists, with teams dedicated entirely to search. What gives?

Google and Bing web search have set a high bar, yet social media search is harder for three reasons:

- There’s too much data

- It’s personalized

- Evaluation is nearly impossible

Let me explain, and also describe how I made a better search engine than xAI’s army of data scientists.

There’s too much data

Imagine 10,000 people. How many of these people do you think are web developers or web designers? Maybe 100? And how many web pages do you think each of them creates per month? I’d suggest that an average project of 10 pages takes one month. Multiplying those figures gives us 1,000 new web pages per month for every 10,000 people.

And how many of those 10,000 people post on social media? Let’s conservatively say that half of them post every other day, or 15 times per month. Those same 10,000 people would create 75,000 new social media posts per month, 75 times the number of new web pages. Right off the bat, social media search has 75 times as much new content to index as web search. And it quickly gets worse.
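The estimate is easy to check. This short Python snippet multiplies out the assumptions from the two paragraphs above; the inputs are illustrative guesses, not measured data.

```python
# Back-of-envelope comparison using the assumptions stated in the text.
POPULATION = 10_000

# Web pages: ~100 developers/designers, each finishing a 10-page project per month.
web_creators = 100
pages_per_creator_per_month = 10
web_pages_per_month = web_creators * pages_per_creator_per_month

# Social posts: half the population posts every other day (~15 posts per month).
posters = POPULATION // 2
posts_per_poster_per_month = 15
social_posts_per_month = posters * posts_per_poster_per_month

ratio = social_posts_per_month / web_pages_per_month

print(f"web pages/month:    {web_pages_per_month:,}")     # 1,000
print(f"social posts/month: {social_posts_per_month:,}")  # 75,000
print(f"ratio:              {ratio:.0f}x")                 # 75x
```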

It’s personalized

Websites are groups of web pages, logically organized by topic and with hyperlinks between related information. As long as a web search engine gets you to the right vicinity, you can find the precise details you need. Social media posts are nothing like this. They are grouped by user, yes, but generally not by topic. A search engine must show you precisely the post you are looking for to succeed.

Not to mention that if two different users enter the same query, they should get different results. This is partly because different users are connected to different people, but also because we expect results to be personalized to us. When we search social media, it is often because there was a particular post we saw that we want to find again. That means the search engine has to keep track of every post you have seen, separately from every post any other user has seen.
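To make the "every post you have seen" requirement concrete, here is a toy Python model: each user gets their own tiny inverted index containing only the posts they have viewed, so the same query returns different results for different people. This is an illustration of the idea only, not how X or any other platform implements search; the class and method names are hypothetical.

```python
from collections import defaultdict


class PerUserSeenIndex:
    """Toy model: one inverted index per user, covering only posts that user has seen."""

    def __init__(self) -> None:
        # user -> term -> set of post ids
        self._indexes = defaultdict(lambda: defaultdict(set))

    def record_view(self, user: str, post_id: str, text: str) -> None:
        """Add a post to the viewer's personal index at the moment they see it."""
        for term in set(text.lower().split()):
            self._indexes[user][term].add(post_id)

    def search(self, user: str, query: str) -> list[str]:
        """Return ids of posts this user has seen that contain every query term."""
        terms = query.lower().split()
        if not terms:
            return []
        index = self._indexes.get(user, {})
        matches = set.intersection(*(index.get(t, set()) for t in terms))
        return sorted(matches)


idx = PerUserSeenIndex()
idx.record_view("alice", "p1", "Great thread on search engines")
idx.record_view("bob", "p2", "Search engines are hard")
print(idx.search("alice", "search engines"))  # ['p1']
print(idx.search("bob", "search engines"))    # ['p2']
```

Even in this toy, every view is a write to some user's index, which is why the aggregate index volume grows so quickly.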

Instead of one index of the entire internet, social media has to deal with hundreds of millions of separate indexes. And these indexes grow much faster in aggregate than the index of the internet. Compounding the issue, data scientists’ hands are tied when using their primary tool: evaluation.

Evaluation is nearly impossible

The general way applied data science works is to identify a goal, prepare the right data, experiment with a new algorithm or parameter, and evaluate the results against the goal. Social media search makes this very difficult.

For a general web search engine like Bing or Google, scientists can identify the thousand most frequent queries. Then they can set a goal to improve the results for these queries. A common goal is that the link users end up clicking on is shown first. When they run an experiment, they can instantly evaluate whether the “correct” link would be shown first for each popular query.
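With a fixed query set, that check is only a few lines of code. The sketch below computes the share of queries whose clicked link is ranked first, plus mean reciprocal rank (MRR), a common companion metric that gives partial credit when the clicked link appears lower down. The function names and the toy data are hypothetical.

```python
def top1_hit_rate(results_by_query: dict[str, list[str]],
                  clicked_by_query: dict[str, str]) -> float:
    """Fraction of queries where the link users clicked is ranked first."""
    if not clicked_by_query:
        return 0.0
    hits = sum(1 for q, clicked in clicked_by_query.items()
               if results_by_query.get(q, [])[:1] == [clicked])
    return hits / len(clicked_by_query)


def mean_reciprocal_rank(results_by_query: dict[str, list[str]],
                         clicked_by_query: dict[str, str]) -> float:
    """Average of 1/rank of the clicked link (0 when it is missing from the results)."""
    if not clicked_by_query:
        return 0.0
    total = 0.0
    for q, clicked in clicked_by_query.items():
        ranking = results_by_query.get(q, [])
        if clicked in ranking:
            total += 1 / (ranking.index(clicked) + 1)
    return total / len(clicked_by_query)


# Toy data: which link users clicked for each popular query, and an experiment's rankings.
clicked = {"q1": "a", "q2": "b", "q3": "c"}
experiment = {"q1": ["a", "x"], "q2": ["x", "b"], "q3": ["y", "z"]}

print(f"{top1_hit_rate(experiment, clicked):.3f}")         # 0.333
print(f"{mean_reciprocal_rank(experiment, clicked):.3f}")  # 0.500
```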

But there are no most common queries for social media. And the few that are repeated between different users need to show new results every few minutes because of how frequently social media updates! Worse still, every user needs to get different results! So instead of a fairly clean goal like top web searches, we have constantly changing, different queries that show different results when repeated anyway.

Experiments on social media search can still set a goal, such as whether arbitrary searches show the result the user ended up clicking on in the top position. But it is much harder to build intuition about what might improve arbitrary queries from arbitrary users at arbitrary times than about one particular query on a relatively static index. Data scientists working on social media search are incredibly talented and can work through many of these issues over time, but it will always be harder than web search.

Beating xAI at their own search

And yet, in two hours and with the help of a chatbot, I created a search engine for X/Twitter that works better than the one actually implemented on the website. No, I didn’t solve all of these difficult problems; I just removed them for my particular scenario.

Most importantly, I scoped down to one user’s data, fixed it in time, and only considered what one user should get as results. In other words, I built a search only over an archive of my own tweets. The amount of data was relatively small, it wouldn’t update, and I knew the queries I wanted to use. There’s no way this would work in production, but it works great for me.

Development details

I already had an Elasticsearch server set up for The Archive. It supports both lexical search (matching the same words) and semantic search (matching the same meaning). I was optimistic that semantic search would work particularly well for tweets, as each tweet is short and focused on one topic. I asked Copilot Think Deeper to write a Python script to index my tweet archive with Elasticsearch.

I'd like to load an archive of my historical tweets into Elastic Search. I want a setup file that takes the JSON document, calculates embeddings, creates two indexes, loads the text into the lexical index, and loads the embeddings into the semantic index. Leave placeholders for the embedding model path. The lexical index should have the tweet full text obviously, the mentioned user names and handles, the time it was posted, whether there was an image, and keep the link to the tweet online. The embedding index should be based only on the full text, with one embedding calculated for the whole tweet.
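For anyone who wants to try this, here is a minimal sketch of such a setup script. It is not the script Copilot produced. It assumes the tweets array from the X archive has been saved as plain JSON (with the JavaScript assignment prefix removed), with each entry shaped like `{"tweet": {...}}` and fields such as `full_text`, `created_at`, `id_str`, and `entities.user_mentions`; check those field names against your own export. It swaps in the `sentence-transformers` library for embeddings, and the index names, handle, model path, and server URL are placeholders.

```python
import json
from datetime import datetime

# Placeholders / assumptions, not values from the original script.
HANDLE = "your_handle"
MODEL_PATH = "path/to/embedding-model"
LEXICAL_INDEX = "tweets-lexical"
SEMANTIC_INDEX = "tweets-semantic"

LEXICAL_MAPPING = {
    "properties": {
        "full_text": {"type": "text"},
        "mention_names": {"type": "text"},
        "mention_handles": {"type": "keyword"},
        "created_at": {"type": "date"},
        "has_image": {"type": "boolean"},
        "url": {"type": "keyword", "index": False},  # stored for display, not searched
    }
}


def semantic_mapping(dims: int) -> dict:
    """One whole-tweet embedding per document, plus text and URL for display."""
    return {
        "properties": {
            "embedding": {"type": "dense_vector", "dims": dims,
                          "index": True, "similarity": "cosine"},
            "full_text": {"type": "text", "index": False},
            "url": {"type": "keyword", "index": False},
        }
    }


def lexical_doc(tweet: dict, handle: str = HANDLE) -> dict:
    """Flatten one exported tweet into the fields the lexical index stores."""
    mentions = tweet.get("entities", {}).get("user_mentions", [])
    media = tweet.get("extended_entities", tweet.get("entities", {})).get("media", [])
    # Export timestamps look like "Wed Oct 10 20:19:24 +0000 2018".
    created = datetime.strptime(tweet["created_at"], "%a %b %d %H:%M:%S %z %Y")
    return {
        "full_text": tweet["full_text"],
        "mention_names": [m["name"] for m in mentions],
        "mention_handles": [m["screen_name"] for m in mentions],
        "created_at": created.isoformat(),
        "has_image": any(m.get("type") == "photo" for m in media),
        "url": f"https://x.com/{handle}/status/{tweet['id_str']}",
    }


def main(archive_path: str) -> None:
    # Third-party imports live here so the helpers above work without them installed.
    from elasticsearch import Elasticsearch, helpers
    from sentence_transformers import SentenceTransformer

    with open(archive_path, encoding="utf-8") as f:
        tweets = [item["tweet"] for item in json.load(f)]

    model = SentenceTransformer(MODEL_PATH)
    vectors = model.encode([t["full_text"] for t in tweets])  # shape: (n_tweets, dims)

    es = Elasticsearch("http://localhost:9200")  # assumed local dev server
    # Create both indexes before inserting any documents.
    if not es.indices.exists(index=LEXICAL_INDEX):
        es.indices.create(index=LEXICAL_INDEX, mappings=LEXICAL_MAPPING)
    if not es.indices.exists(index=SEMANTIC_INDEX):
        es.indices.create(index=SEMANTIC_INDEX, mappings=semantic_mapping(vectors.shape[1]))

    actions = []
    for tweet, vec in zip(tweets, vectors):
        doc = lexical_doc(tweet)
        actions.append({"_index": LEXICAL_INDEX, "_id": tweet["id_str"], "_source": doc})
        actions.append({"_index": SEMANTIC_INDEX, "_id": tweet["id_str"],
                        "_source": {"embedding": vec.tolist(),
                                    "full_text": doc["full_text"], "url": doc["url"]}})
    # helpers.bulk takes the client plus an iterable of action dicts.
    helpers.bulk(es, actions)
```

Calling `main("tweets.json")` would build both indexes. Keeping the embedding in its own index mirrors the prompt's two-index design, although a single index with both text and `dense_vector` fields would also work.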

Copilot’s script did need some debugging. I had to update the transformers and accelerate packages, add some code to create the indexes before trying to insert data, and correct its usage of the bulk() method of the Elasticsearch Python client. Then I had Copilot create a second script that would search exactly the way I wanted to use it: run both a lexical and a semantic search, drop all the results into the context of an LLM, and ask it to re-rank them.

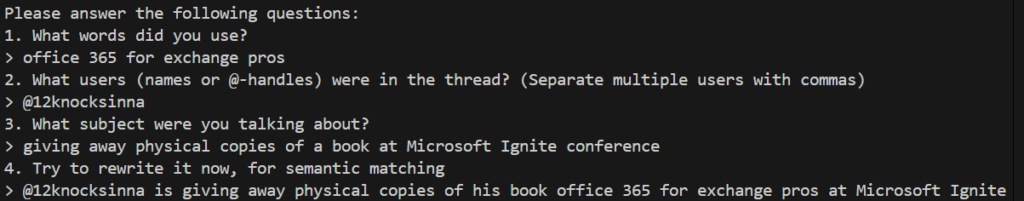

create another python file that is a commandline to perform a search on these two indexes. It should ask the user a few questions, get the top few results from a few different queries, and put them into context for a local LLM to choose the best tweets, with the text and URL. These are the questions to ask:

1. What words did you use?
2. What users (names or @-handles) were in the thread?
3. What subject were you talking about?
4. Try to rewrite it now, for semantic matching

These should result in 3 searches to the ES database:

1. Lexical search on the words, along with any users mentioned
2. Semantic search on the subject
3. Semantic search on the rewrite

I had to do a little more work to get it to implement the LLM call using my preferred local LLM hosting method (LangChain with llama.cpp).
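A similarly hedged sketch of the search side follows; it is not the author’s code. It assumes a lexical index named `tweets-lexical` (fields `full_text`, `mention_names`, `mention_handles`, `url`) and a semantic index named `tweets-semantic` (fields `embedding`, `full_text`, `url`), embeddings from the `sentence-transformers` library, and LangChain’s `LlamaCpp` wrapper from `langchain_community`. All index names and model paths are placeholders. It runs the three searches described in the prompt and hands the deduplicated candidates to the local LLM for re-ranking.

```python
LEXICAL_INDEX = "tweets-lexical"          # hypothetical index names
SEMANTIC_INDEX = "tweets-semantic"
MODEL_PATH = "path/to/embedding-model"    # must match the model used at index time
LLM_PATH = "path/to/model.gguf"           # placeholder local llama.cpp model

QUESTIONS = {
    "words": "What words did you use? ",
    "users": "What users (names or @-handles) were in the thread? ",
    "subject": "What subject were you talking about? ",
    "rewrite": "Try to rewrite it now, for semantic matching: ",
}


def lexical_query(words: str, users: str) -> dict:
    """Search 1: match the remembered words, plus any mentioned names or handles."""
    should = [{"match": {"full_text": words}}] if words else []
    if users:
        handles = [u.lstrip("@") for u in users.replace(",", " ").split()]
        should.append({"match": {"mention_names": users}})
        should.append({"terms": {"mention_handles": handles}})
    return {"bool": {"should": should}}


def knn_query(vector: list[float], k: int = 5) -> dict:
    """Searches 2 and 3: approximate nearest neighbours over tweet embeddings."""
    return {"field": "embedding", "query_vector": vector, "k": k, "num_candidates": 10 * k}


def rerank_prompt(answers: dict, hits: list[dict]) -> str:
    """Deduplicate hits from all searches and ask the LLM to pick the best ones."""
    seen, lines = set(), []
    for hit in hits:
        url = hit["_source"]["url"]
        if url not in seen:
            seen.add(url)
            lines.append(f"- {hit['_source']['full_text']} ({url})")
    return (
        "I am trying to find one of my old tweets.\n"
        f"Words I remember: {answers['words']}\nUsers involved: {answers['users']}\n"
        f"Subject: {answers['subject']}\nParaphrase: {answers['rewrite']}\n\n"
        "Candidate tweets:\n" + "\n".join(lines)
        + "\n\nList the best matching tweets, most likely first, with their text and URL."
    )


def main() -> None:
    # Third-party imports live here so the helpers above work without them installed.
    from elasticsearch import Elasticsearch
    from langchain_community.llms import LlamaCpp
    from sentence_transformers import SentenceTransformer

    answers = {key: input(question).strip() for key, question in QUESTIONS.items()}
    es = Elasticsearch("http://localhost:9200")  # assumed local dev server
    model = SentenceTransformer(MODEL_PATH)

    query = lexical_query(answers["words"], answers["users"])
    hits = es.search(index=LEXICAL_INDEX, query=query, size=5)["hits"]["hits"]
    for key in ("subject", "rewrite"):
        if answers[key]:
            vector = model.encode(answers[key]).tolist()
            hits += es.search(index=SEMANTIC_INDEX, knn=knn_query(vector), size=5)["hits"]["hits"]

    llm = LlamaCpp(model_path=LLM_PATH, n_ctx=4096, temperature=0)
    print(llm.invoke(rerank_prompt(answers, hits)))
```

Calling `main()` asks the four questions, runs the searches, and prints the LLM’s ranked picks. Deduplicating by URL matters because the same tweet often comes back from more than one search.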

Results

And yes, it works great! Tweets I could not find through x.com were showing up, usually first, in my search results.

You can have the code I used if you’d like it: Turns a privacy export of X/Twitter tweets into a hybrid lexical/semantic search engine · GitHub. Beware, you’ll need to do some configuration and setup, modifying the code yourself. But when you do, you’ll have sidestepped all the reasons social media search is so bad, creating your own search engine that works great, just for you.